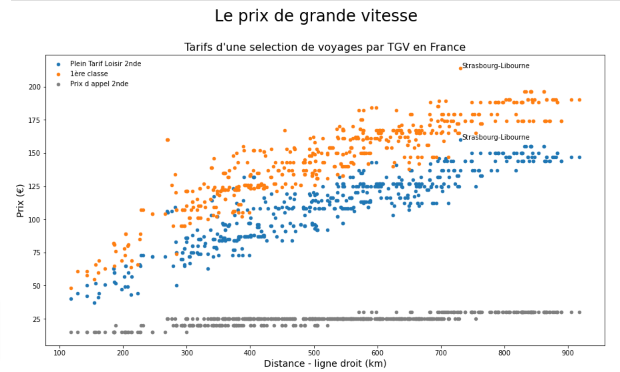

Le prix de grande vitesse

Back in October, Tom Forth tweeted about the rich amount of open data published by SNCF compared with railways in Britain, and, seemingly in no time, produced the chart below comparing tariffs…

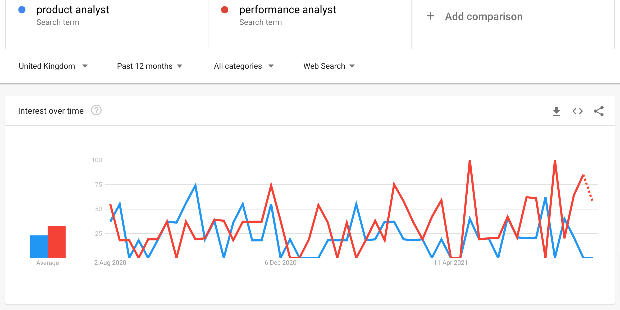

Performance analyst / Product Analyst?

I enjoyed reading Kate Gallo’s overview of the role of Product Analysts. It made me reflect on work I did on very similar roles when I was the Head…

Digital multi-hyphenates

Matt Jukes’ recent post on Multi-hyphenates drew a lot of comments on Twitter and certainly struck a chord with me. Like Matt, I started my ‘digital’ journey in an earlier…

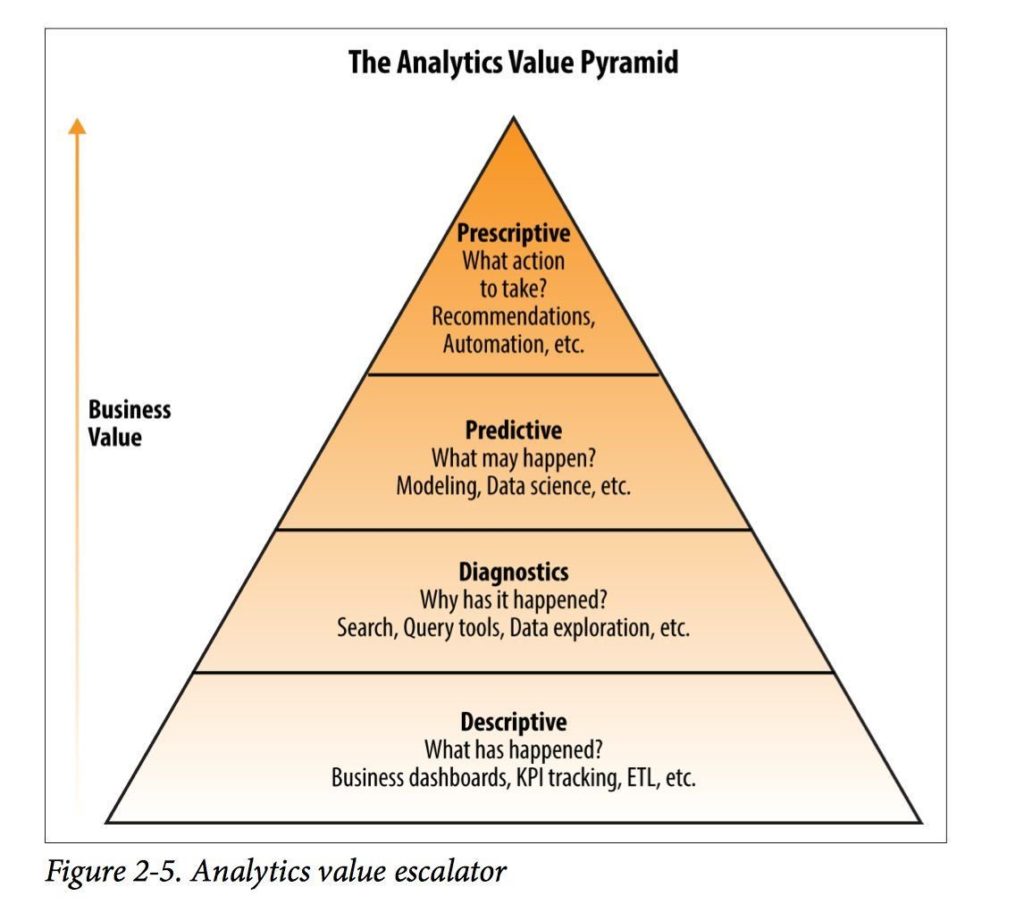

Some thoughts on data and smart analytics

I was asked to write some briefing notes about data and smart analytics for a strategy paper, and I thought I’d share them here. High-quality government data is important for: measuring…

GP patient numbers in Winchester and open data

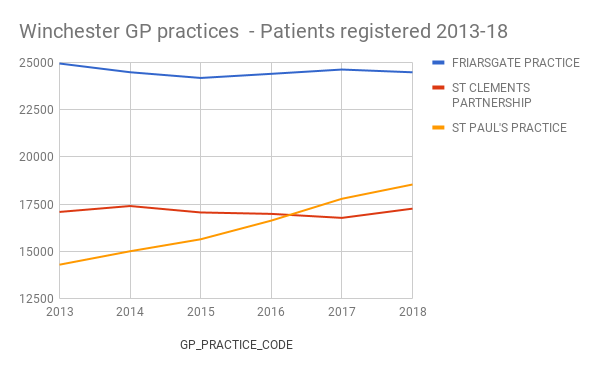

My GP’s surgery, St Paul’s in Winchester, has an adjacent pharmacy and a small private car park. It’s always been very busy, but recently I’ve found myself queuing to get a…

Being more precise about date formats

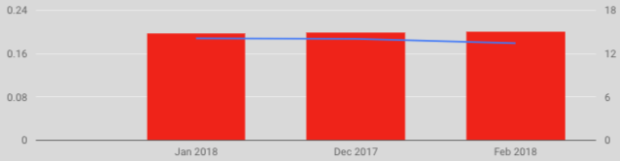

I’ve been working with Data Studio today and realised it interprets dates a bit more strictly than charts in Sheets. I thought I’d share what I did – and its…
